Generative Adversarial Networks (GANs) have revolutionized image synthesis by enabling the creation of highly realistic images from random noise. A prominent example is the StyleGAN architecture developed by NVIDIA, which generates photorealistic human faces with remarkable detail and diversity. StyleGAN leverages a novel style-based generator design that controls visual attributes at different scales, enhancing the quality and variety of synthesized images. Another key application of GANs in image synthesis is image-to-image translation, exemplified by the Pix2Pix model. Pix2Pix uses paired training data to convert images from one domain to another, such as turning sketches into colored photographs or transforming satellite images into maps. This capability highlights GANs' power to learn complex mappings between different image domains, facilitating advancements in computer graphics and visual content creation.

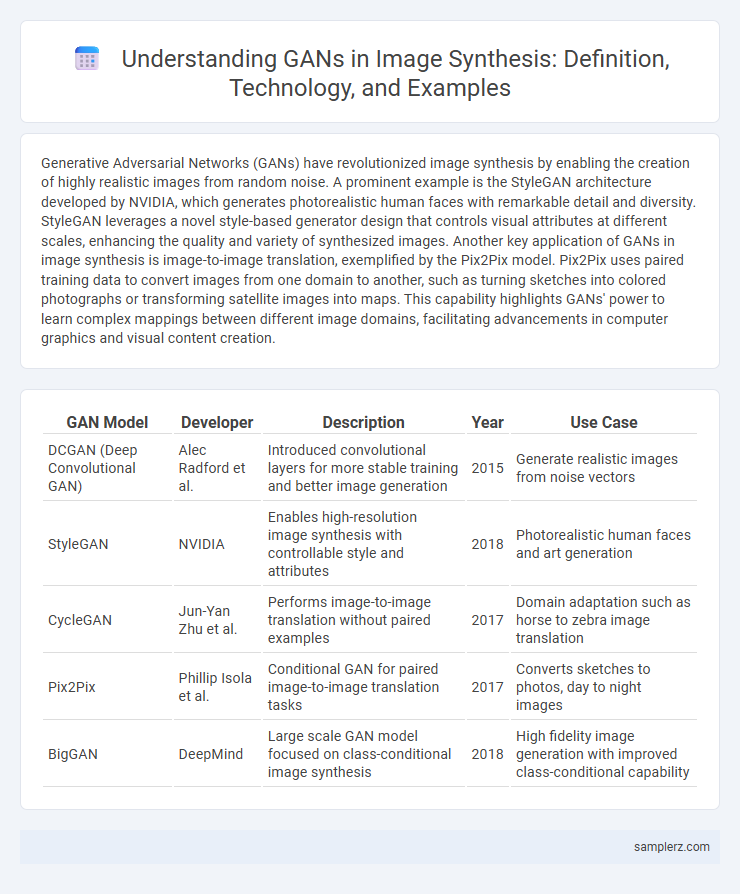

Table of Comparison

| GAN Model | Developer | Description | Year | Use Case |
|---|---|---|---|---|
| DCGAN (Deep Convolutional GAN) | Alec Radford et al. | Introduced an all-convolutional architecture with design guidelines for more stable training and better image generation | 2015 | Generating realistic images from noise vectors |
| StyleGAN | NVIDIA | Enables high-resolution image synthesis with controllable styles and attributes | 2018 | Photorealistic human faces and art generation |
| CycleGAN | Jun-Yan Zhu et al. | Performs image-to-image translation without paired examples | 2017 | Domain adaptation, such as horse-to-zebra translation |
| Pix2Pix | Phillip Isola et al. | Conditional GAN for paired image-to-image translation | 2017 | Sketch-to-photo and day-to-night translation |
| BigGAN | Andrew Brock et al. (DeepMind) | Large-scale GAN focused on class-conditional image synthesis | 2018 | High-fidelity class-conditional image generation |

Introduction to GANs in Image Synthesis

Generative Adversarial Networks (GANs) revolutionize image synthesis through a competitive learning process between two networks: a generator, which maps random noise vectors to images, and a discriminator, which estimates whether a given image is real or generated. The generator is trained to fool the discriminator while the discriminator is trained to catch its fakes, and this contest drives continuous improvement in image quality. Popular applications include deepfake creation, artistic style transfer, and photorealistic content generation for gaming and virtual reality.
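The adversarial game comes from the original GAN objective `min_G max_D E[log D(x)] + E[log(1 - D(G(z)))]`. As a minimal sketch, assuming the discriminator's output probabilities are already available (no networks are trained here), both losses can be computed in plain Python. The generator uses the common "non-saturating" form, minimizing `-log D(G(z))`; the function names are illustrative.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy loss for the discriminator.

    d_real: probabilities D(x) the discriminator assigns to real images.
    d_fake: probabilities D(G(z)) it assigns to generated images.
    Minimizing this pushes D(x) toward 1 and D(G(z)) toward 0.
    """
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: minimize -log D(G(z)),
    i.e. reward the generator when the discriminator is fooled."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)


# A discriminator that outputs 0.5 everywhere cannot tell real from fake.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # 2*ln(2), about 1.386
print(generator_loss([0.5, 0.5]))                  # ln(2), about 0.693
```

In a real training loop these losses are computed on mini-batches and the two networks are updated in alternation.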

StyleGAN: Realistic Portrait Generation

StyleGAN, developed by NVIDIA, is a Generative Adversarial Network (GAN) known for producing highly realistic portraits with exceptional detail and diversity. Its style-based generator first maps the input noise vector to an intermediate latent space and then injects that "style" into every resolution of the synthesis network. As a result, coarse layers control attributes such as pose and face shape, middle layers control finer facial features and hairstyle, and fine layers control color scheme and texture. The generated faces are often difficult to distinguish from real photographs. Applications range from digital art and video game character creation to augmenting datasets for facial recognition systems.
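In the original StyleGAN, the style is injected through adaptive instance normalization (AdaIN): each channel of a feature map is normalized to zero mean and unit variance, then scaled and shifted by style values computed from the intermediate latent vector `w`. Below is a minimal, framework-free sketch for a single channel. The function and argument names are illustrative, and real implementations operate on whole tensors. (StyleGAN2 later replaced AdaIN with weight demodulation.)

```python
import math


def adain(channel, style_scale, style_bias, eps=1e-8):
    """Adaptive instance normalization (AdaIN) for one feature-map channel.

    channel: the channel's activations, flattened over height and width.
    style_scale, style_bias: this channel's style values. In StyleGAN they
        come from a learned affine transform of the latent w; here they
        are plain numbers.
    """
    n = len(channel)
    mean = sum(channel) / n
    var = sum((v - mean) ** 2 for v in channel) / n
    std = math.sqrt(var + eps)
    # Normalize to zero mean and unit variance, then apply the style.
    return [style_scale * (v - mean) / std + style_bias for v in channel]


styled = adain([1.0, 2.0, 3.0, 4.0], style_scale=2.0, style_bias=5.0)
print(styled)  # mean about 5.0, standard deviation about 2.0
```

Whatever statistics the incoming activations had, the output takes its mean and spread from the style. This is why each layer's style controls the attributes at that layer's scale.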

CycleGAN: Image-to-Image Translation

CycleGAN performs unpaired image-to-image translation: it learns to transform images from one domain to another without requiring paired training examples. Its architecture uses two generator-discriminator pairs, one for each translation direction, and enforces cycle consistency. An image translated to the other domain and back should therefore closely match the original. Applications include converting photographs into paintings, translating scenes between seasons (for example, summer to winter), and style transfer between other visual domains.
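The cycle-consistency idea can be sketched as an L1 penalty. For generators G: X -> Y and F: Y -> X, CycleGAN penalizes `|F(G(x)) - x|` and `|G(F(y)) - y|`, and adds this term to the two adversarial losses with a weighting factor (10 in the original paper). The sketch below rests on simplifying assumptions: images are flattened lists of pixel values, and the "generators" are hypothetical toy functions standing in for neural networks.

```python
def l1_distance(a, b):
    """Mean absolute difference between two flattened images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, xs, ys):
    """Cycle-consistency loss for generators G: X -> Y and F: Y -> X.

    xs, ys: unpaired lists of flattened images from domains X and Y.
    Translating to the other domain and back should recover the input.
    """
    forward = sum(l1_distance(F(G(x)), x) for x in xs) / len(xs)   # x -> G -> F
    backward = sum(l1_distance(G(F(y)), y) for y in ys) / len(ys)  # y -> F -> G
    return forward + backward


# Hypothetical toy "generators" that brighten or darken every pixel.
def brighten(img):
    return [p + 0.2 for p in img]


def darken(img):
    return [p - 0.2 for p in img]


def darken_too_little(img):
    return [p - 0.1 for p in img]


xs, ys = [[0.1, 0.5]], [[0.4, 0.9]]
print(cycle_consistency_loss(brighten, darken, xs, ys))             # close to 0
print(cycle_consistency_loss(brighten, darken_too_little, xs, ys))  # about 0.2
```

Because the loss only compares each image with its own reconstruction, no paired (x, y) examples are ever needed.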

Pix2Pix: Conditional Image Generation

Pix2Pix is a prominent example of conditional image generation with GANs: it learns from paired training data to transform an input image into a realistic output. Its conditional GAN generator learns to map inputs such as semantic label maps or sketches to photorealistic images, supporting image-to-image translation tasks such as turning sketches into color photographs or maps into satellite images. It pairs a U-Net generator with a PatchGAN discriminator that judges local image patches, and it adds an L1 reconstruction loss to the adversarial loss. Because its outputs are high quality and context-aware, Pix2Pix has become a widely used baseline for image-to-image translation.
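Pix2Pix trains its generator on a weighted sum of two terms: a conditional adversarial loss and an L1 distance to the ground-truth output. The L1 term keeps results close to the paired target, while the adversarial term encourages sharp detail. Below is a minimal sketch of that generator loss. It assumes the discriminator's per-patch probabilities are already computed and that images are flattened lists of pixels; the function and variable names are illustrative.

```python
import math


def pix2pix_generator_loss(d_fake, fake, target, lam=100.0):
    """Generator objective in the style of Pix2Pix: adversarial + lam * L1.

    d_fake: discriminator probabilities that (input, generated) pairs are
        real; with a PatchGAN discriminator there is one value per patch.
    fake, target: flattened pixels of the generated and ground-truth images.
    lam: weight of the L1 term (100 in the original paper).
    """
    adversarial = sum(-math.log(p) for p in d_fake) / len(d_fake)
    l1 = sum(abs(f - t) for f, t in zip(fake, target)) / len(fake)
    return adversarial + lam * l1


patch_probs = [0.5, 0.5, 0.5, 0.5]  # hypothetical 2x2 PatchGAN output
print(pix2pix_generator_loss(patch_probs, [0.0, 1.0], [0.0, 1.0]))    # ln(2): perfect reconstruction
print(pix2pix_generator_loss(patch_probs, [0.01, 0.99], [0.0, 1.0]))  # about ln(2) + 1.0
```

The large L1 weight means even small pixel errors dominate the loss, which is why Pix2Pix outputs stay faithful to the paired targets.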

GauGAN: Semantic Image Synthesis

GauGAN, developed by NVIDIA, uses generative adversarial networks (GANs) to turn simple semantic layouts into photorealistic images. It conditions the generator on segmentation labels through spatially-adaptive normalization (SPADE). SPADE uses the label map to modulate the generator's normalized activations at every layer, so each region is rendered as its labeled class, such as sky, grass, or water. This lets artists and designers sketch complex scenes quickly, and it shows how GANs can bridge abstract layouts and vivid, realistic images.
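GauGAN's conditioning mechanism, spatially-adaptive normalization (SPADE), can be sketched in a few lines. Activations are normalized with parameter-free statistics (batch normalization in the paper; here a single image's channel), then scaled and shifted by values that depend on the semantic label at each pixel. This sketch makes simplifying assumptions: hypothetical per-label lookup tables replace the learned convolutional layers that compute the per-pixel scale and shift, and all names are illustrative.

```python
import math


def spade(activations, seg_map, gamma_for, beta_for, eps=1e-5):
    """SPADE-style spatially-adaptive normalization for one channel.

    activations: the channel's values, flattened over height and width.
    seg_map: the semantic label at each of those positions.
    gamma_for, beta_for: hypothetical label -> value tables standing in for
        the small conv nets that SPADE applies to the segmentation map.
    """
    n = len(activations)
    mean = sum(activations) / n
    var = sum((a - mean) ** 2 for a in activations) / n
    std = math.sqrt(var + eps)
    # Scale and shift vary per pixel, following the label at that position.
    return [gamma_for[label] * (a - mean) / std + beta_for[label]
            for a, label in zip(activations, seg_map)]


labels = ["sky", "sky", "grass", "grass"]
gamma = {"sky": 1.0, "grass": 0.5}
beta = {"sky": 0.8, "grass": 0.3}
print(spade([0.2, 0.4, 0.6, 0.8], labels, gamma, beta))
```

Because the modulation is applied at every layer, the layout information is not "washed out" by normalization deeper in the generator.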

BigGAN: High-Resolution Image Creation

BigGAN scales up generative adversarial networks to produce high-resolution, photorealistic images with remarkable detail and diversity. It combines class-conditional training with much larger models and batch sizes than earlier GANs, synthesizing ImageNet-class images at resolutions up to 512x512 pixels. Its architecture uses a hierarchical latent space, in which chunks of the noise vector feed different generator resolutions, and it applies spectral normalization to stabilize training. At publication, it substantially improved on prior results for standard image-quality metrics such as Inception Score and FID.
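BigGAN also uses the "truncation trick": at sampling time, latent values whose magnitude exceeds a threshold are resampled. Shrinking the threshold trades sample diversity for fidelity. Below is a minimal sketch of sampling such a truncated latent vector with Python's standard library. The function name and the dimension in the example are illustrative assumptions.

```python
import random


def truncated_latent(dim, threshold, rng=None):
    """Sample a latent vector from N(0, 1), resampling any entry whose
    magnitude exceeds `threshold` (a truncated normal distribution).

    Smaller thresholds give higher-fidelity but less varied images.
    """
    rng = rng or random.Random()
    z = []
    for _ in range(dim):
        value = rng.gauss(0.0, 1.0)
        while abs(value) > threshold:  # reject and redraw out-of-range values
            value = rng.gauss(0.0, 1.0)
        z.append(value)
    return z


z = truncated_latent(dim=128, threshold=0.5, rng=random.Random(0))
print(len(z), max(abs(v) for v in z) <= 0.5)  # 128 True
```

Passing a seeded `random.Random` makes samples reproducible, which is handy when comparing images across truncation thresholds.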

StarGAN: Multi-Domain Image Transformation

StarGAN performs multi-domain image translation with a single model. Two-domain models such as CycleGAN need a separate generator for each pair of domains; StarGAN instead conditions one generator on a target domain label. This lets it change multiple attributes, such as hair color, gender, or age, within one framework. It has advanced applications in facial attribute editing, facial expression synthesis, and other cross-domain image synthesis tasks.
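StarGAN's generator takes both an image and a target domain label. The label, encoded one-hot, is spatially replicated and concatenated with the input image as extra channels. Below is a framework-free sketch of building that input, assuming images are nested Python lists rather than tensors. The function name and the four-domain example are illustrative.

```python
def stargan_input(image, target_domain, num_domains):
    """Build a StarGAN-style generator input.

    image: nested lists shaped [channels][height][width].
    target_domain: index of the domain to translate into.
    The one-hot domain label is replicated over every pixel and appended
    as extra channels, so one generator can serve all domains.
    """
    height, width = len(image[0]), len(image[0][0])
    label_planes = [
        [[1.0 if d == target_domain else 0.0] * width for _ in range(height)]
        for d in range(num_domains)
    ]
    return image + label_planes


rgb = [[[0.0, 0.0], [0.0, 0.0]] for _ in range(3)]  # 3-channel 2x2 image
x = stargan_input(rgb, target_domain=1, num_domains=4)
print(len(x))  # 7 channels: 3 image + 4 label planes
```

Changing only `target_domain` while keeping the same image is what lets a single network translate into any of the domains.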

Progressive GANs: Improved Image Quality

Progressive GANs (ProGAN), introduced by NVIDIA, improve image synthesis by starting training at a very low resolution (4x4). They then progressively add layers that double the resolution, fading each new layer in smoothly. Because coarse structure is learned first, training is more stable and produces fewer artifacts than training all layers at full resolution from the start. This approach allowed the authors to generate 1024x1024 face images on the CelebA-HQ dataset, and it has also been applied to realistic artwork generation.
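When a Progressive GAN adds a higher-resolution layer, the transition can be sketched as a linear blend. The output mixes the upsampled image from the previous stage with the new layer's output, using a weight `alpha` that increases from 0 to 1 during training. Below is a minimal single-channel sketch in plain Python, assuming images are 2D lists and nearest-neighbour upsampling. In the real model this blend happens on tensors inside the generator and is mirrored in the discriminator.

```python
def upsample_nearest(grid):
    """Nearest-neighbour 2x upsampling of a 2D grid (list of rows)."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(2)]  # repeat each value horizontally
        out.append(wide)
        out.append(list(wide))                     # repeat each row vertically
    return out


def fade_in(low_res_rgb, high_res_rgb, alpha):
    """Blend a newly added resolution layer into the output.

    alpha rises from 0 to 1 during training: at 0 the output is the
    upsampled old image, at 1 it comes entirely from the new layer.
    """
    up = upsample_nearest(low_res_rgb)
    return [[(1 - alpha) * u + alpha * h for u, h in zip(up_row, hi_row)]
            for up_row, hi_row in zip(up, high_res_rgb)]


old = [[1.0]]                         # 1x1 output of the previous stage
new = [[0.0, 0.0], [0.0, 0.0]]        # 2x2 output of the new layer
print(fade_in(old, new, alpha=0.25))  # [[0.75, 0.75], [0.75, 0.75]]
```

The gradual blend prevents the freshly initialized layer from suddenly disrupting the already-trained lower-resolution layers.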

DeepFake Technology: Face Swapping and Editing

DeepFake technology enables realistic face swapping and editing in images and videos. Many face-swapping tools are built on autoencoders, often with added adversarial (GAN) losses that sharpen results. They synthesize facial features that preserve the target's expressions and lighting conditions, producing seamless, convincing alterations. GAN generators such as StyleGAN are also used for face editing by moving an image's latent code in directions that correspond to attributes such as age or expression.
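One way GAN generators such as StyleGAN are used for face editing is latent-space manipulation, which can be sketched as vector arithmetic. The edited code is `w + strength * n`, where `n` is a unit vector in latent space associated with an attribute such as age or smile. This sketch rests on a clearly stated assumption: the direction vector is hypothetical. Finding a meaningful direction is the hard part, and it is not shown here; one published approach, InterFaceGAN, uses the normal of a linear classifier's decision boundary.

```python
import math


def edit_latent(w, direction, strength):
    """Move a latent code along an attribute direction.

    w: latent code of the image to edit.
    direction: vector assumed to correspond to an attribute (hypothetical
        here; in practice it must be found, e.g. by fitting a linear
        classifier on latents labeled with that attribute).
    strength: how far to move; the sign chooses more or less of the attribute.
    """
    norm = math.sqrt(sum(d * d for d in direction))
    return [wi + strength * di / norm for wi, di in zip(w, direction)]


print(edit_latent([0.0, 0.0], [3.0, 4.0], strength=5.0))  # [3.0, 4.0]
```

Feeding the edited code back through the generator yields the modified face; normalizing the direction makes `strength` comparable across attributes.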

Art and Design: GANs for Artistic Image Creation

Generative Adversarial Networks (GANs) have transformed artistic image creation by enabling the generation of unique, intricate artworks that blend abstract and realistic styles. Platforms such as Artbreeder build on GAN models like BigGAN and StyleGAN to let users create and blend entirely novel digital art pieces. Photo-to-painting services such as DeepArt instead rely on neural style transfer, a related but non-adversarial technique, to render photographs in the styles of famous artists. These tools enhance creative workflows, allowing artists and designers to explore new aesthetics and push the boundaries of visual expression.
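Blending two GAN-generated images is commonly done by interpolating their latent vectors. Spherical linear interpolation (slerp) is a popular choice for Gaussian latents because, unlike a straight-line average, it keeps intermediate points near the shell where such high-dimensional samples concentrate. The sketch below assumes plain Python lists as latent vectors. It is a generic illustration, not a claim that any particular platform, Artbreeder included, uses exactly this formula.

```python
import math


def slerp(z0, z1, t):
    """Spherical linear interpolation between latent vectors z0 and z1.

    t = 0 returns z0, t = 1 returns z1; values in between follow the arc
    between the two vectors instead of the straight chord.
    """
    dot = sum(a * b for a, b in zip(z0, z1))
    n0 = math.sqrt(sum(a * a for a in z0))
    n1 = math.sqrt(sum(b * b for b in z1))
    omega = math.acos(max(-1.0, min(1.0, dot / (n0 * n1))))  # angle between them
    sin_omega = math.sin(omega)
    if sin_omega < 1e-6:  # (anti)parallel vectors: fall back to linear interpolation
        return [(1 - t) * a + t * b for a, b in zip(z0, z1)]
    w0 = math.sin((1 - t) * omega) / sin_omega
    w1 = math.sin(t * omega) / sin_omega
    return [w0 * a + w1 * b for a, b in zip(z0, z1)]


print(slerp([1.0, 0.0], [0.0, 1.0], 0.5))  # both entries about 0.7071
```

Decoding a sequence of `slerp` results for `t` between 0 and 1 gives a smooth morph between the two source images.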

samplerz.com