Field-Programmable Gate Arrays (FPGAs) serve as hardware accelerators whose logic can be reconfigured to match a specific computing task. Their architecture processes data in parallel, which improves performance in applications such as machine learning, signal processing, and cryptography. Companies integrate FPGAs into data centers to offload compute-intensive workloads, reducing latency and improving energy efficiency. One prominent example is Microsoft's Project Catapult, which places FPGAs inside servers to accelerate data center services. The framework speeds up search algorithms, advertisement ranking, and AI inference, with significant gains in throughput. FPGAs can deliver up to 4x the performance of CPU-only implementations in specific workloads, although the gain depends heavily on how much of the workload maps onto parallel hardware.
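To put speedup figures like the one above in context, the following minimal sketch applies Amdahl's law to an offloaded workload. The function name `end_to_end_speedup` and the example numbers (80% of runtime offloaded, a 10x faster kernel) are assumptions chosen for illustration, not measurements from any real deployment.

```python
def end_to_end_speedup(offloaded_fraction: float, kernel_speedup: float) -> float:
    """Amdahl's law: overall speedup when only part of a workload is accelerated."""
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be between 0 and 1")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    # Time that still runs on the CPU, as a fraction of the original runtime.
    remaining = 1.0 - offloaded_fraction
    return 1.0 / (remaining + offloaded_fraction / kernel_speedup)


# Hypothetical workload: 80% of runtime moves to the FPGA and runs 10x faster there.
print(round(end_to_end_speedup(0.8, 10.0), 2))  # 3.57
```

Even with a 10x faster kernel, the part that stays on the CPU caps the overall gain at roughly 3.6x in this example, which is why reported speedups are always tied to specific workloads.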

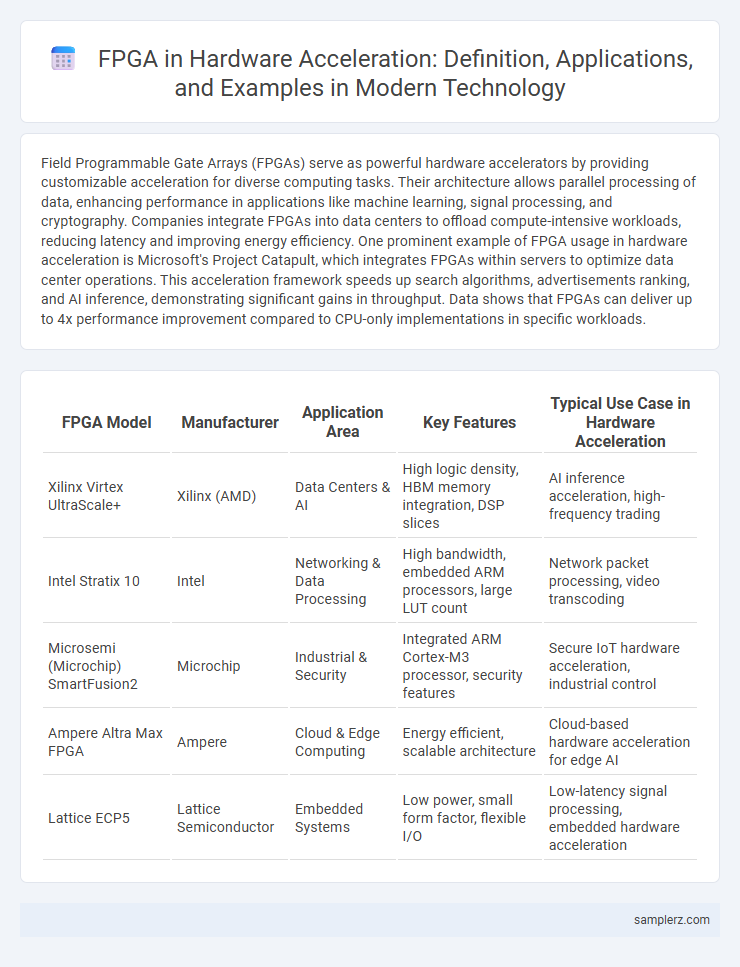

Table of Comparison

| FPGA Model | Manufacturer | Application Area | Key Features | Typical Use Case in Hardware Acceleration |
|---|---|---|---|---|
| Xilinx Virtex UltraScale+ | Xilinx (AMD) | Data Centers & AI | High logic density, HBM integration on select devices, DSP slices | AI inference acceleration, high-frequency trading |
| Intel Stratix 10 | Intel | Networking & Data Processing | High bandwidth, embedded ARM processors on SoC variants, large logic capacity | Network packet processing, video transcoding |
| SmartFusion2 | Microchip (formerly Microsemi) | Industrial & Security | Integrated ARM Cortex-M3 processor, security features | Secure IoT hardware acceleration, industrial control |
| Lattice ECP5 | Lattice Semiconductor | Embedded Systems | Low power, small form factor, flexible I/O | Low-latency signal processing, embedded hardware acceleration |

Introduction to FPGA in Hardware Acceleration

Field-Programmable Gate Arrays (FPGAs) support hardware acceleration by providing customizable, parallel processing architectures that can outperform traditional CPUs on specific computational tasks. Their reconfigurable logic blocks can be optimized for workloads such as machine learning inference, signal processing, and cryptographic algorithms, reducing latency and energy consumption. Major technology companies use FPGAs in data centers to accelerate AI workloads, where they improve computational efficiency and throughput.

Key Benefits of FPGA-Based Acceleration

FPGA-based hardware acceleration offers fine-grained parallel processing that can significantly boost performance in data-intensive applications such as AI inference and high-frequency trading. Its reconfigurable architecture allows the hardware to be tailored to a workload, which can reduce latency and energy consumption compared to general-purpose CPUs and, for some workloads, GPUs. Fixed-function ASICs are typically faster and more power-efficient for a single task, but FPGAs can be reprogrammed after deployment and avoid the cost and lead time of custom silicon. This flexibility enables rapid deployment of custom algorithms, improving system efficiency and lowering operational costs.
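Much of the latency and throughput benefit comes from pipelining: once the pipeline is full, a new result can emerge every clock cycle. The sketch below is a simple timing model, not a vendor tool; `pipeline_timing`, the 10-stage depth, and the 250 MHz clock are hypothetical values picked for illustration.

```python
def pipeline_timing(num_items, stages, clock_hz, initiation_interval=1):
    """Model a fixed-depth hardware pipeline.

    Returns (total_cycles, first_result_latency_s, throughput_items_per_s).
    """
    if num_items < 1 or stages < 1 or initiation_interval < 1 or clock_hz <= 0:
        raise ValueError("all arguments must be positive")
    # The first item needs `stages` cycles; each later item adds one
    # initiation interval because the stages overlap in time.
    total_cycles = stages + (num_items - 1) * initiation_interval
    first_result_latency_s = stages / clock_hz
    throughput_items_per_s = num_items * clock_hz / total_cycles
    return total_cycles, first_result_latency_s, throughput_items_per_s


# Hypothetical design: 1000 items through a 10-stage pipeline at 250 MHz.
cycles, latency_s, throughput = pipeline_timing(1000, 10, 250e6)
print(cycles)  # 1009
```

In this model the first result appears after 10 cycles (40 ns at 250 MHz), and all 1000 items finish in 1009 cycles rather than the 10,000 an unpipelined design of the same depth would need.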

Real-World Applications of FPGA in Data Centers

FPGAs are widely used in data centers to accelerate machine learning inference, providing customizable parallel processing that can significantly reduce latency and power consumption. Companies like Microsoft integrate FPGAs into their Azure infrastructure to optimize artificial intelligence workloads, supporting real-time decision-making and large-scale data analytics. This hardware acceleration handles complex algorithms and high-throughput data streams efficiently, improving performance in cloud computing environments.

FPGA for Machine Learning Inference Acceleration

FPGAs enable customized hardware acceleration for machine learning inference by offering parallel processing and low latency. Vendors such as Xilinx (now part of AMD) and Intel supply FPGAs that data center operators deploy to run deep learning models, which can reduce power consumption compared to GPUs for some inference workloads. This adaptability allows real-time processing of complex neural networks in applications such as image recognition, natural language processing, and autonomous systems.
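Inference accelerators on FPGAs commonly work with low-precision integers rather than floating point, since integer multiply-accumulate units map well onto DSP slices. The following sketch shows symmetric 8-bit quantization and an integer dot product in plain Python. The function names, the scales, and the sample values are assumptions for illustration and do not reflect any particular vendor toolchain.

```python
def quantize(values, scale):
    """Map floats to signed 8-bit integers using one shared scale (symmetric)."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_dot(a_q, b_q):
    """Integer multiply-accumulate; the accumulator is wider than 8 bits."""
    acc = 0
    for x, y in zip(a_q, b_q):
        acc += x * y
    return acc


# Hypothetical weights and activations with power-of-two scales.
weights = [0.5, -0.25, 0.75]
activations = [1.0, 2.0, -1.0]
w_scale, a_scale = 1 / 128, 1 / 32

acc = int8_dot(quantize(weights, w_scale), quantize(activations, a_scale))
print(acc)                      # -3072
print(acc * w_scale * a_scale)  # -0.75, matching the floating-point dot product
```

The values here were chosen so that quantization is exact. With arbitrary data the integer result only approximates the floating-point one, and the scale has to be chosen to balance range against rounding error.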

Networking Acceleration Using FPGA

FPGA-based networking acceleration significantly enhances data packet processing by offloading compute-intensive tasks such as encryption, filtering, and pattern matching from the CPU. Major data centers integrate FPGAs to reduce network latency and increase throughput for applications like 5G infrastructure, cloud data routing, and real-time analytics. Companies like Xilinx and Intel provide adaptable FPGA solutions that enable programmable, high-speed packet processing tailored to evolving network protocols and traffic patterns.
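The filtering step described above can be pictured as a match-action lookup on header fields. The sketch below is a software model only: it reads the IPv4 protocol field and the destination port, then looks up an action. The rule table `RULES`, the helper `make_packet`, and the chosen ports are hypothetical, and a real FPGA design would typically perform the match in parallel hardware tables rather than in a dictionary.

```python
import struct

DROP, FORWARD = "drop", "forward"

# Hypothetical rule table: (ip_protocol, destination_port) -> action.
RULES = {(6, 22): DROP, (17, 53): FORWARD}


def classify(packet: bytes, default=FORWARD):
    """Return the action for an IPv4 packet carrying TCP or UDP."""
    ihl_bytes = (packet[0] & 0x0F) * 4  # IPv4 header length in bytes
    protocol = packet[9]                # 6 = TCP, 17 = UDP
    # TCP and UDP both place the destination port at offset 2 of their header.
    dst_port = struct.unpack_from("!H", packet, ihl_bytes + 2)[0]
    return RULES.get((protocol, dst_port), default)


def make_packet(protocol, dst_port):
    """Build a minimal 20-byte IPv4 header followed by source and destination ports."""
    header = bytearray(20)
    header[0] = 0x45  # version 4, header length of 5 32-bit words
    header[9] = protocol
    return bytes(header) + struct.pack("!HH", 12345, dst_port)


print(classify(make_packet(6, 22)))   # drop
print(classify(make_packet(6, 443)))  # forward (no rule, default action)
```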

FPGA in High-Frequency Trading Systems

FPGAs significantly accelerate high-frequency trading (HFT) systems by enabling ultra-low-latency market data processing and order execution. Their reconfigurable architecture allows trading logic to be implemented directly in hardware, reducing response times from the microseconds typical of CPU-based systems to, in many designs, the sub-microsecond range. Leading financial firms use FPGA platforms such as Xilinx Alveo or Intel Stratix to optimize market data analysis and order routing, gaining a critical edge in latency-sensitive trading environments.

Image and Video Processing with FPGA Acceleration

FPGA hardware acceleration improves image and video processing by handling pixel data in parallel and computing in real time, which is crucial for high-resolution video streaming and complex image analysis. Modern FPGAs combine configurable logic blocks with DSP slices, accelerating tasks like video encoding, decoding, and image filtering with reduced latency. Industry applications include surveillance systems, autonomous vehicles, and medical imaging, where FPGA-based acceleration improves performance and energy efficiency.
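Streaming image filters on FPGAs are often built around line buffers: the design keeps only the few most recent rows on chip and produces output as pixels arrive. The following sketch models that idea in software for a 3x3 filter (correlation form, no kernel flip, no border padding). The function name `stream_conv3x3` and the test image are assumptions for illustration.

```python
from collections import deque


def stream_conv3x3(rows, kernel):
    """Consume image rows one at a time and yield 3x3-filtered rows (no padding)."""
    line_buffer = deque(maxlen=3)  # holds only the three most recent rows
    for row in rows:
        line_buffer.append(row)
        if len(line_buffer) < 3:
            continue  # not enough rows buffered yet for a full window
        out = []
        for x in range(len(row) - 2):
            acc = 0
            # In hardware these nine multiply-accumulates would run in parallel.
            for ky in range(3):
                for kx in range(3):
                    acc += kernel[ky][kx] * line_buffer[ky][x + kx]
            out.append(acc)
        yield out


# Hypothetical 4x4 image and an all-ones box kernel.
image = [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]
box = [[1, 1, 1]] * 3
print(list(stream_conv3x3(image, box)))  # [[45, 54], [81, 90]]
```

Because only three rows are buffered at any time, memory use depends on the image width rather than its height, which is what makes this structure a good fit for on-chip memory.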

FPGA in Scientific Computing and Simulations

FPGA technology accelerates scientific computing by enabling customizable parallel processing architectures tailored to complex simulations. High-performance computing applications in physics, climate modeling, and molecular dynamics benefit from the low latency and high throughput of FPGAs, which can outperform traditional CPUs and GPUs in specific tasks. The flexibility of FPGAs allows algorithms to be optimized iteratively, improving simulation speed and energy efficiency in scientific research.

FPGA Acceleration in Embedded Systems

FPGA acceleration in embedded systems improves real-time data processing by offloading compute-intensive tasks from CPUs, increasing efficiency and reducing latency. Common applications include automotive driver assistance, industrial automation, and IoT devices, where the parallel processing capabilities of FPGAs speed up signal processing and machine learning workloads. Embedded platforms such as Xilinx Zynq or Intel's SoC FPGA families, which combine Arm processor cores with programmable logic, enable customizable hardware acceleration tailored to specific application requirements.
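A typical signal processing task offloaded in embedded designs is a finite impulse response (FIR) filter, which maps naturally onto a shift register feeding a row of multipliers. The sketch below models that structure in software. The function name `fir_filter` and the tap values are assumptions for illustration.

```python
def fir_filter(samples, taps):
    """Direct-form FIR filter modelled as a shift register plus multiply-accumulate."""
    delay_line = [0] * len(taps)  # register contents start at zero
    outputs = []
    for sample in samples:
        # Shift the newest sample in and drop the oldest one.
        delay_line = [sample] + delay_line[:-1]
        # In hardware each tap has its own multiplier, so this sum takes one cycle.
        outputs.append(sum(t * d for t, d in zip(taps, delay_line)))
    return outputs


# Feeding a unit impulse returns the taps themselves (the impulse response).
print(fir_filter([1, 0, 0, 0], [1, 2, 3]))  # [1, 2, 3, 0]
```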

Future Trends in FPGA Hardware Acceleration

Emerging trends in FPGA hardware acceleration emphasize integration with AI workloads, using adaptive architectures to optimize machine learning inference and training. Advances in high-level synthesis tools improve programmability, enabling faster deployment of complex algorithms onto FPGA platforms. Future innovations include tighter coupling with cloud infrastructure and heterogeneous computing systems, with the aim of further improving performance and energy efficiency in data centers.


samplerz.com
