Tokenization in data protection replaces sensitive information with unique substitute values called tokens, which preserve what a system needs to operate without exposing the original details. For example, in credit card processing, the card number is replaced with a token that can be used in a specific context but has no exploitable value outside it. This reduces the risk from data breaches because systems that handle only tokens never store or transmit the sensitive data in its original form. Healthcare organizations often use tokenization to protect patient information, substituting tokens for personal identifiers such as Social Security numbers or medical record numbers. These tokens allow data to be analyzed and shared without exposing patients' identities. Tokenization supports compliance with regulations such as HIPAA by minimizing the exposure of protected health information (PHI) during transactions.

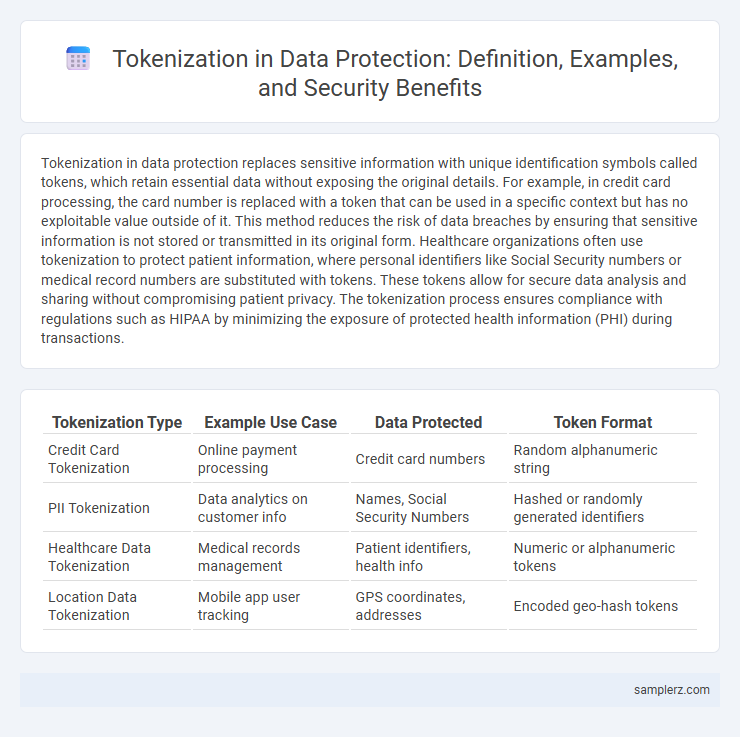

Comparison of Tokenization Types

| Tokenization Type | Example Use Case | Data Protected | Token Format |
|---|---|---|---|
| Credit Card Tokenization | Online payment processing | Credit card numbers | Random alphanumeric string |
| PII Tokenization | Data analytics on customer info | Names, Social Security Numbers | Hashed or randomly generated identifiers |
| Healthcare Data Tokenization | Medical records management | Patient identifiers, health info | Numeric or alphanumeric tokens |
| Location Data Tokenization | Mobile app user tracking | GPS coordinates, addresses | Encoded geo-hash tokens |
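The location row lists geohash tokens. A geohash is a public, reversible encoding of latitude and longitude rather than a secret, so any protection it offers comes from truncating it to a coarse cell (or from mapping it through a vault). A minimal Python sketch of the standard geohash encoding, with `geohash_encode` as a hypothetical helper name:

```python
# Standard geohash base-32 alphabet (the letters a, i, l and o are omitted).
_BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"


def geohash_encode(lat: float, lon: float, precision: int = 7) -> str:
    """Encode a coordinate as a geohash of `precision` characters."""
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        raise ValueError("coordinate out of range")
    lat_range = [-90.0, 90.0]
    lon_range = [-180.0, 180.0]
    chars: list[str] = []
    bits, bit_count = 0, 0
    use_lon = True  # geohash interleaves bits, starting with longitude
    while len(chars) < precision:
        rng, value = (lon_range, lon) if use_lon else (lat_range, lat)
        mid = (rng[0] + rng[1]) / 2
        # Each bit halves the current interval: 1 = upper half, 0 = lower half.
        if value >= mid:
            bits = (bits << 1) | 1
            rng[0] = mid
        else:
            bits <<= 1
            rng[1] = mid
        use_lon = not use_lon
        bit_count += 1
        if bit_count == 5:  # every 5 bits become one base-32 character
            chars.append(_BASE32[bits])
            bits, bit_count = 0, 0
    return "".join(chars)


# A shorter prefix names a larger cell containing the same point,
# so truncation is what reduces location precision.
print(geohash_encode(57.64911, 10.40744, precision=11))
print(geohash_encode(57.64911, 10.40744, precision=4))
```

Because every character refines the previous cell, the 4-character hash is always a prefix of the 11-character one; storing only the short prefix is what limits how precisely a user can be located.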

Understanding Tokenization in Data Protection

Tokenization replaces sensitive data elements with non-sensitive equivalents called tokens, which retain the information a system needs without revealing the original data. In payment processing, credit card numbers are tokenized to prevent unauthorized access, which improves security and reduces compliance scope. Because randomly generated tokens have no mathematical relationship to the original values, they cannot be reverse-engineered; the original data can be recovered only through the tokenization system itself, which minimizes the damage a data breach can cause.
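A minimal sketch of vault-based tokenization in Python, assuming an in-memory dictionary as the vault and a hypothetical `tok_` prefix; a production vault would be a hardened, access-controlled datastore. Because each token comes from a cryptographically secure random generator, nothing about the token reveals the value it stands for:

```python
import secrets


class TokenVault:
    """Minimal in-memory token vault (illustrative only)."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random, so it has no mathematical link to the value.
        token = "tok_" + secrets.token_urlsafe(16)
        while token in self._token_to_value:  # guard against an unlikely collision
            token = "tok_" + secrets.token_urlsafe(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # The only way back to the original value is a lookup in the vault.
        return self._token_to_value[token]


vault = TokenVault()
t = vault.tokenize("4111111111111111")
print(t)                    # tok_ followed by random characters, different each run
print(vault.detokenize(t))  # 4111111111111111
```

Systems that receive only `t` can store and pass it around freely; only code with access to the vault can turn it back into the card number.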

Tokenization vs. Encryption: Key Differences

Tokenization replaces sensitive data with non-sensitive placeholders called tokens, which have no exploitable value on their own, and so limits where sensitive data is exposed. Encryption converts data into ciphertext using an algorithm and a key, and anyone who holds the key can decrypt it to restore the original. Vault-based tokenization, by contrast, does not derive the token from the data with a reversible algorithm; the original value is recovered only by looking the token up in a secured token vault (some vaultless products instead derive tokens with format-preserving encryption). Because downstream systems store only tokens rather than the actual sensitive data, a breach of those systems exposes nothing usable, which makes tokenization especially effective for protecting payment card information and personally identifiable information (PII).

How Tokenization Secures Payment Card Data

Tokenization secures payment card data by replacing sensitive card details, such as the primary account number (PAN), with unique, non-sensitive tokens that have no exploitable value. The mapping between each token and its PAN is kept in a secure token vault, isolated from the payment processing systems, which handle only tokens; this minimizes the card data an attacker could steal in a breach. Because fewer systems store, process, or transmit cardholder data, tokenization helps meet PCI DSS requirements and can significantly reduce the scope of security audits.
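The sketch below shows one way a payment token can keep the PAN's length and last four digits (so receipts and customer-service screens keep working) while the remaining digits are random. Rejecting tokens that pass the Luhn check is an assumed convention here, used so a token can't be mistaken for a real card number; `PanVault` and its in-memory dictionary are hypothetical stand-ins for a real token vault:

```python
import re
import secrets


def luhn_valid(number: str) -> bool:
    """Return True if a digit string passes the Luhn checksum used by card numbers."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


class PanVault:
    """Hypothetical in-memory vault mapping card-shaped tokens to PANs."""

    def __init__(self) -> None:
        self._token_to_pan: dict[str, str] = {}

    def _new_token(self, pan: str) -> str:
        last_four = pan[-4:]
        while True:
            # Random digits replace everything except the last four.
            body = "".join(secrets.choice("0123456789") for _ in range(len(pan) - 4))
            token = body + last_four
            # Assumed convention: reject Luhn-valid candidates so a token never
            # passes as a real card number; also skip the PAN and issued tokens.
            if not luhn_valid(token) and token != pan and token not in self._token_to_pan:
                return token

    def tokenize(self, pan: str) -> str:
        digits = pan.replace(" ", "")
        if not re.fullmatch(r"[0-9]{13,19}", digits):
            raise ValueError("expected a 13-19 digit card number")
        token = self._new_token(digits)
        self._token_to_pan[token] = digits
        return token

    def detokenize(self, token: str) -> str:
        return self._token_to_pan[token]


vault = PanVault()
token = vault.tokenize("4111 1111 1111 1111")
print(token)                    # 16 digits ending in 1111, different on every run
print(vault.detokenize(token))  # 4111111111111111
```

Roughly nine in ten random candidates already fail the Luhn check, so the retry loop almost always finishes on its first or second pass.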

Tokenization in Healthcare: Protecting Patient Information

Tokenization in healthcare replaces sensitive patient information, such as Social Security numbers and medical record identifiers, with non-sensitive tokens, reducing the risk from data breaches. By keeping actual patient data separate from its tokenized representations, healthcare organizations can support HIPAA compliance and keep data secure in storage and in transit. This approach enhances patient privacy while keeping the data usable for billing, analytics, and treatment processes.
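For analytics, a common variant is deterministic tokenization, where the same identifier always yields the same token so records can still be joined across datasets. A minimal sketch, assuming HMAC-SHA256 as the keyed function and a hypothetical `pt_` prefix; this token is one-way, so an organization that must recover the original identifier would also keep a vault mapping:

```python
import hashlib
import hmac

# Hypothetical key for illustration only; a real deployment would fetch it
# from a key management service and never hard-code it.
TOKEN_KEY = b"example-key-do-not-use"


def patient_token(identifier: str, key: bytes = TOKEN_KEY) -> str:
    """Map a patient identifier to a stable, non-reversible token."""
    # Normalize formatting so "123-45-6789" and "123456789" get the same token.
    normalized = "".join(ch for ch in identifier if ch.isalnum()).upper()
    # A keyed HMAC, unlike a plain hash, can't be brute-forced over the small
    # space of possible SSNs without the key.
    digest = hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return "pt_" + digest[:32]


admissions = [{"ssn": "123-45-6789", "ward": "cardiology"}]
claims = [{"ssn": "123456789", "amount": 1200}]

# Replace the identifier with its token before the data leaves the clinical system.
deid_admissions = [{"patient": patient_token(r["ssn"]), "ward": r["ward"]} for r in admissions]
deid_claims = [{"patient": patient_token(r["ssn"]), "amount": r["amount"]} for r in claims]

# Analysts can still link the two datasets by token without seeing the SSN.
print(deid_admissions[0]["patient"] == deid_claims[0]["patient"])  # True
```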

Real-World Tokenization Use Cases in Retail

Retailers use tokenization to secure payment card data by replacing card numbers with unique tokens during transactions, reducing the risk from data breaches. Customer loyalty programs use tokenization to protect personal information, so rewards can be tracked without exposing customers' identities. Inventory systems apply tokenization to safeguard proprietary data, keeping sensitive product details confidential throughout the supply chain.

Cloud Security Enhancement with Tokenization

Tokenization enhances cloud security by replacing sensitive data, such as credit card numbers or personal identifiers, with unique tokens that can preserve the original data format while keeping the sensitive values out of cloud workloads. The original data is stored separately in a secure token vault, which limits the impact of a breach and helps organizations meet requirements such as PCI DSS and the GDPR. By integrating tokenization into cloud environments, organizations reduce their attack surface and strengthen data privacy without compromising operational efficiency.
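A minimal sketch of field-level tokenization before data is written to cloud storage. The field names, the `OnPremVault` class, and the `tok_` prefix are hypothetical; the assumption is that the vault runs outside the cloud environment, so the cloud copy holds only tokens:

```python
import secrets
from typing import Any

# Hypothetical set of fields treated as sensitive in this example.
SENSITIVE_FIELDS = {"card_number", "email", "national_id"}


class OnPremVault:
    """Stand-in for a token vault that stays outside the cloud environment."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_hex(12)
        while token in self._store:  # keep tokens unique
            token = "tok_" + secrets.token_hex(12)
        self._store[token] = value
        return token

    def detokenize(self, token: str) -> str:
        return self._store[token]


def tokenize_record(record: dict[str, Any], vault: OnPremVault) -> dict[str, Any]:
    """Swap sensitive fields for tokens; other fields pass through unchanged."""
    return {
        k: vault.tokenize(str(v)) if k in SENSITIVE_FIELDS else v
        for k, v in record.items()
    }


def detokenize_record(record: dict[str, Any], vault: OnPremVault) -> dict[str, Any]:
    """Restore sensitive fields (always returned as strings) for an authorized caller."""
    return {
        k: vault.detokenize(v) if k in SENSITIVE_FIELDS else v
        for k, v in record.items()
    }


vault = OnPremVault()
order = {"order_id": 17, "card_number": "4111111111111111", "email": "a@example.com", "total": 25.5}
cloud_copy = tokenize_record(order, vault)  # only this version is sent to cloud storage
print(cloud_copy)
print(detokenize_record(cloud_copy, vault) == order)  # True
```

Non-sensitive fields such as `total` stay usable for reporting in the cloud, while the card number and email never leave the vault.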

Tokenization for Compliance: PCI DSS and GDPR

Tokenization plays a key role in compliance with PCI DSS and the GDPR by replacing sensitive payment card and personal data with non-sensitive tokens. Because systems that handle only tokens do not store the actual data, tokenization reduces the risk from data breaches and can significantly shrink the scope of compliance audits. Under the GDPR, tokenized personal data is generally treated as pseudonymized data: it remains personal data while the organization can re-identify it, but pseudonymization is recognized as a safeguard. Well-implemented tokenization therefore keeps transaction processing secure while supporting these regulatory requirements.

Dynamic Tokenization in Mobile Payment Apps

Dynamic tokenization in mobile payment apps replaces sensitive payment information with tokens combined with values that are generated for each transaction and valid only once, such as a single-use token or a one-time cryptogram. Because these values are generated in real time and expire after use, data intercepted from one transaction cannot be replayed by an attacker. Isolating the actual card data from the transaction process in this way provides strong protection against fraud and unauthorized access.
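The single-use, time-limited behavior described above can be sketched as follows. This is a simplification under stated assumptions: `card_ref` is a hypothetical reference to a card already held in a vault, tokens live in memory, and expiry uses an injectable clock so it can be tested. Real wallet schemes such as EMV payment tokenization add device binding and cryptograms that this sketch does not model:

```python
import secrets
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class _Entry:
    card_ref: str       # hypothetical reference to a vaulted card, never the PAN
    expires_at: float


class DynamicTokenIssuer:
    """Issues tokens that are valid for one redemption within a short time window."""

    def __init__(self, ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._active: dict[str, _Entry] = {}

    def issue(self, card_ref: str) -> str:
        token = secrets.token_urlsafe(16)
        self._active[token] = _Entry(card_ref, self._clock() + self._ttl)
        return token

    def redeem(self, token: str) -> Optional[str]:
        # pop() removes the token, so a second redemption (a replay) finds nothing.
        entry = self._active.pop(token, None)
        if entry is None or self._clock() > entry.expires_at:
            return None
        return entry.card_ref


issuer = DynamicTokenIssuer(ttl_seconds=60)
token = issuer.issue("card-ref-001")
print(issuer.redeem(token))  # card-ref-001
print(issuer.redeem(token))  # None (replay rejected)
```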

Tokenization in Identity and Access Management

Tokenization in Identity and Access Management (IAM) limits how often sensitive credentials such as passwords are exposed: after a user authenticates, the system issues a token (for example, a session or access token) that stands in for the credentials on later requests and has no exploitable value outside the system or once it expires or is revoked. These tokens enable secure authentication and authorization while minimizing the exposure of actual credentials during access requests. Implementing tokenization reduces the risk of credential theft and supports compliance with regulations such as the GDPR and HIPAA.
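A minimal sketch of opaque access tokens in Python, assuming the password check has already succeeded and that sessions live in memory; `SessionStore` is a hypothetical class. Storing only a SHA-256 hash of each token means a leak of the session table does not hand an attacker usable tokens:

```python
import hashlib
import secrets
from typing import Optional


class SessionStore:
    """Issues opaque access tokens and keeps only their hashes server-side."""

    def __init__(self) -> None:
        self._sessions: dict[str, str] = {}  # token hash -> user id

    @staticmethod
    def _hash(token: str) -> str:
        # A fast hash is enough here because the tokens are long random values.
        return hashlib.sha256(token.encode("utf-8")).hexdigest()

    def issue(self, user_id: str) -> str:
        # Called only after the user's password has been verified (not shown).
        token = secrets.token_urlsafe(32)
        self._sessions[self._hash(token)] = user_id
        return token

    def authenticate(self, token: str) -> Optional[str]:
        """Return the user id for a valid token, or None."""
        return self._sessions.get(self._hash(token))

    def revoke(self, token: str) -> None:
        self._sessions.pop(self._hash(token), None)


store = SessionStore()
token = store.issue("alice")      # handed to the client after login
print(store.authenticate(token))  # alice
store.revoke(token)               # e.g. on logout
print(store.authenticate(token))  # None
```

The client sends `token` with each request instead of the password, so the password crosses the network only once per login.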

Challenges and Best Practices in Data Tokenization

Data tokenization poses challenges such as guaranteeing that tokens are unique, securing the token vault, and maintaining system performance under high transaction volumes. Best practices include enforcing strict access controls on detokenization, regularly auditing tokenization processes, and using format-preserving tokens where downstream systems need data in its original format, balancing usability and protection. Organizations should also integrate tokenization smoothly with existing workflows to minimize disruption while strengthening data security.
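Three of the practices above (unique tokens, access control on detokenization, and auditing) can be sketched in a few lines of Python. The role names, the in-memory store, and the `AuditedVault` class are hypothetical; a real deployment would back the audit trail with tamper-evident, centrally collected logs:

```python
import secrets
from datetime import datetime, timezone


class AuditedVault:
    """Hypothetical vault showing unique tokens, role checks and an audit trail."""

    def __init__(self, detokenize_roles: set[str]) -> None:
        self._store: dict[str, str] = {}
        self._detokenize_roles = detokenize_roles
        # Each entry: (UTC timestamp, caller, action, allowed)
        self.audit_trail: list[tuple[str, str, str, bool]] = []

    def _audit(self, caller: str, action: str, allowed: bool) -> None:
        timestamp = datetime.now(timezone.utc).isoformat()
        self.audit_trail.append((timestamp, caller, action, allowed))

    def tokenize(self, value: str, caller: str) -> str:
        token = secrets.token_hex(16)
        while token in self._store:  # enforce uniqueness before issuing
            token = secrets.token_hex(16)
        self._store[token] = value
        self._audit(caller, "tokenize", True)
        return token

    def detokenize(self, token: str, caller: str, role: str) -> str:
        allowed = role in self._detokenize_roles
        self._audit(caller, "detokenize", allowed)  # denied attempts are logged too
        if not allowed:
            raise PermissionError(f"role {role!r} may not detokenize")
        return self._store[token]


vault = AuditedVault(detokenize_roles={"payments"})
token = vault.tokenize("4111111111111111", caller="checkout-service")
print(vault.detokenize(token, caller="settlement-job", role="payments"))  # 4111111111111111
try:
    vault.detokenize(token, caller="report-builder", role="analytics")
except PermissionError as exc:
    print(exc)  # role 'analytics' may not detokenize
```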

samplerz.com