Natural language watermarking in AI safety embeds subtle signals in generated text so that its authenticity can be verified and its origin traced. The text stays readable, while the signal itself consists of specific word choices, syntactic patterns, or phrase structures that are unlikely to appear in non-watermarked text, so it can be detected without altering the overall meaning. This approach helps limit misuse of AI-generated content by letting developers identify and attribute outputs. Watermarking techniques use recurring patterns in wording to create a linguistic fingerprint tied to a particular model and its configuration. Because detection works on the text itself, traceability does not in principle require collecting information about the user who requested it. Integrated into AI safety frameworks, these methods make it easier to monitor how AI-generated information spreads.

Table of Comparison

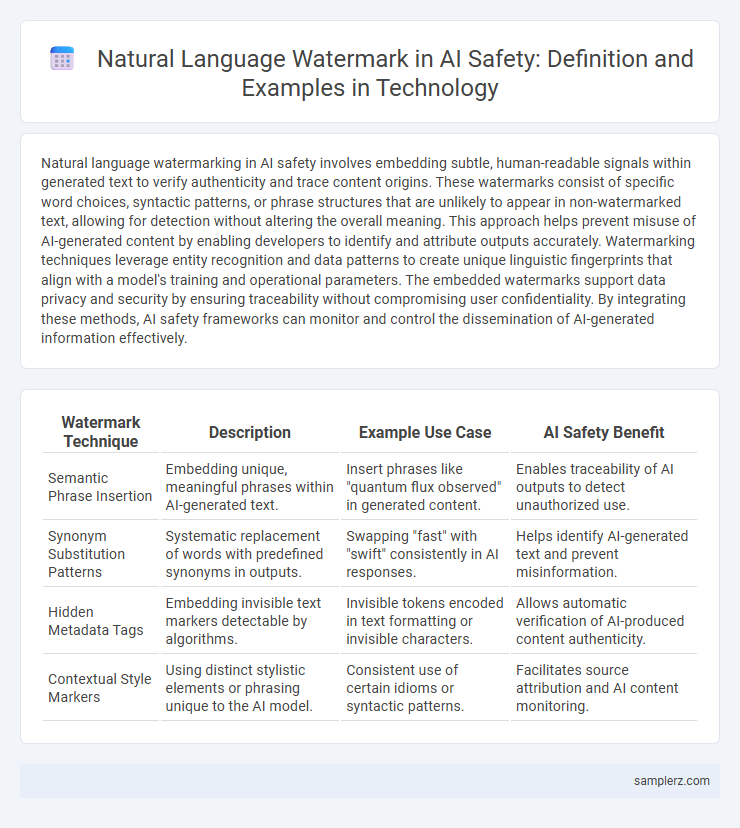

| Watermark Technique | Description | Example Use Case | AI Safety Benefit |
|---|---|---|---|
| Semantic Phrase Insertion | Embedding unique, meaningful phrases within AI-generated text. | Insert phrases like "quantum flux observed" in generated content. | Enables traceability of AI outputs to detect unauthorized use. |
| Synonym Substitution Patterns | Systematic replacement of words with predefined synonyms in outputs. | Swapping "fast" with "swift" consistently in AI responses. | Helps identify AI-generated text and prevent misinformation. |
| Hidden Metadata Tags | Embedding invisible text markers detectable by algorithms. | Invisible tokens encoded in text formatting or invisible characters. | Allows automatic verification of AI-produced content authenticity. |
| Contextual Style Markers | Using distinct stylistic elements or phrasing unique to the AI model. | Consistent use of certain idioms or syntactic patterns. | Facilitates source attribution and AI content monitoring. |
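
The "Hidden Metadata Tags" row can be made concrete with a small sketch. It assumes one particular encoding that the table does not specify: zero-width Unicode characters carrying the bits of a short tag. The function names `embed_tag`, `extract_tag`, and `strip_tag` are hypothetical, and the example is an illustration rather than a production scheme.

```python
# Assumption: two zero-width code points stand for the bits 0 and 1.
ZERO = "\u200b"  # zero-width space      -> bit 0
ONE = "\u200c"   # zero-width non-joiner -> bit 1


def embed_tag(text: str, tag: str) -> str:
    """Hide the UTF-8 bits of `tag` as invisible characters after the first word."""
    bits = "".join(f"{byte:08b}" for byte in tag.encode("utf-8"))
    payload = "".join(ONE if bit == "1" else ZERO for bit in bits)
    head, sep, tail = text.partition(" ")
    return head + payload + sep + tail


def extract_tag(text: str) -> str:
    """Collect the invisible characters and decode them back into a string."""
    bits = "".join("1" if ch == ONE else "0" for ch in text if ch in (ZERO, ONE))
    usable = len(bits) - len(bits) % 8  # ignore a trailing partial byte
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, usable, 8))
    return data.decode("utf-8", errors="replace")


def strip_tag(text: str) -> str:
    """Show how easily this kind of marker is removed."""
    return text.replace(ZERO, "").replace(ONE, "")
```

The `strip_tag` helper is included on purpose: a marker of this kind disappears as soon as the text is normalized or retyped, which is one reason the pattern-based techniques in the other rows exist.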

Introduction to Natural Language Watermarking in AI Safety

Natural language watermarking embeds subtle, identifiable patterns within AI-generated text to verify authenticity and prevent misuse. This technique enhances AI safety by enabling detection of synthetic content and mitigating risks like misinformation or unauthorized replication. Effective watermarking relies on linguistic features that remain robust across various text transformations while maintaining natural readability.

Key Principles of AI Safety and Information Integrity

Natural language watermarking embeds subtle, verifiable markers in AI-generated text so that its authenticity and origin can be checked, which reinforces information integrity. The technique supports key principles of AI safety: transparency, accountability, and resistance to manipulation of AI outputs. Effective watermarking helps detect unauthorized use of content, mitigating risks associated with misinformation and preserving trust in AI systems.

How Natural Language Watermarks Work

Natural language watermarks embed subtle, algorithmically generated patterns within AI-generated text that are imperceptible to human readers but detectable by specialized algorithms. These watermarks leverage linguistic features such as syntactic structures, word choice distributions, or token frequency variations to create a unique, traceable signature. By enabling the identification of AI-authored content, natural language watermarks enhance accountability and reduce misinformation risks in AI-generated outputs.
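
One way to picture the "word choice distribution" idea is a keyed preference over candidate words. The sketch below is an illustration under stated assumptions, not a method taken from this article: `SECRET_KEY`, `is_green`, and `pick_watermarked` are hypothetical names, and the ranked candidate list stands in for whatever a real language model would propose at each step.

```python
import hashlib

SECRET_KEY = "demo-key"  # hypothetical secret shared by generator and detector


def is_green(prev_token: str, token: str, key: str = SECRET_KEY) -> bool:
    """Keyed hash that marks roughly half of all (previous, next) token pairs as 'green'."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode("utf-8")).digest()
    return digest[0] % 2 == 0


def pick_watermarked(prev_token: str, candidates: list[str]) -> str:
    """Return the best-ranked green candidate, or the top candidate if none is green.

    `candidates` is assumed to be ordered from most to least preferred.
    """
    for candidate in candidates:
        if is_green(prev_token, candidate):
            return candidate
    return candidates[0]
```

A reader sees an ordinary word choice each time. Only someone holding the key can tell that green words turn up more often than chance would predict.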

Popular Methods for Embedding Watermarks in Text

Popular methods for embedding natural language watermarks in AI-generated text include synonym substitution, where specific words are replaced with less common synonyms to create a detectable pattern while keeping the text readable. Another approach is controlled sentence rephrasing, which preserves the meaning of a sentence while encoding hidden signals that verification algorithms can identify. These techniques support AI safety by making generated content traceable and its authenticity verifiable across applications.
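
Synonym substitution can be sketched as encoding one bit per interchangeable word. The pair table `PAIRS` and the functions `embed_bits` and `read_bits` below are hypothetical and kept deliberately small; a real system would need a far larger table and sense-aware substitution.

```python
import re

# Hypothetical carrier pairs: position 0 encodes bit 0, position 1 encodes bit 1.
PAIRS = [("fast", "swift"), ("big", "large"), ("help", "assist")]
LOOKUP = {word: pair for pair in PAIRS for word in pair}
WORD_RE = re.compile(r"[A-Za-z]+")


def embed_bits(text: str, bits: list[int]) -> str:
    """Rewrite each carrier word so that its position in the pair matches the next bit."""
    remaining = list(bits)

    def swap(match: re.Match) -> str:
        word = match.group(0)
        pair = LOOKUP.get(word.lower())
        if pair is None or not remaining:
            return word  # not a carrier word, or no bits left to encode
        return pair[remaining.pop(0)]  # note: original capitalization is not preserved

    return WORD_RE.sub(swap, text)


def read_bits(text: str) -> list[int]:
    """Read one bit from every carrier word found in the text, in order."""
    words = (w.lower() for w in WORD_RE.findall(text))
    return [LOOKUP[w].index(w) for w in words if w in LOOKUP]
```

For example, `embed_bits("A fast reply can help a big team.", [1, 0, 1])` changes "fast" to "swift" and "big" to "large" and leaves "help" alone, and `read_bits` on the result recovers `[1, 0, 1]`. Carrier words beyond the end of the bit list are left unchanged and are still read back as bits, so a practical scheme would also need to agree on the message length.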

Case Study: Detecting AI-Generated Content with Watermarks

Natural language watermarks embed subtle patterns in AI-generated text so that synthetic content can be detected later, which strengthens AI safety protocols. For detection, one design relies on shifts in the token distribution: the generator is nudged toward certain tokens, and a detector tests whether a passage contains more of them than chance would explain. Because the signal is spread across many tokens, it is intended to survive light edits, although heavy paraphrasing can weaken it. This supports traceability and authenticity verification, both of which matter for mitigating misinformation and maintaining trust in AI-driven communications.
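
Detection from a token distribution shift can be sketched as a simple statistical test. The example assumes a hypothetical keyed rule, `is_green`, that marks about half of all token pairs, and a generator that favored marked pairs. Neither the rule nor the name `watermark_z_score` comes from this article.

```python
import hashlib
import math


def is_green(prev_token: str, token: str, key: str) -> bool:
    """Hypothetical keyed rule: about half of all (previous, next) pairs count as green."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode("utf-8")).digest()
    return digest[0] % 2 == 0


def watermark_z_score(tokens: list[str], key: str, green_rate: float = 0.5) -> float:
    """Standard deviations by which the green count exceeds what chance predicts."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0  # fewer than two tokens: nothing to test
    hits = sum(is_green(prev, tok, key) for prev, tok in pairs)
    expected = green_rate * len(pairs)
    std = math.sqrt(len(pairs) * green_rate * (1 - green_rate))
    return (hits - expected) / std
```

Unmarked text should score near zero on average, while text generated with a preference for green pairs scores higher as it gets longer. A deployment would flag text above a chosen threshold, and the threshold sets the false positive rate.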

Real-World Applications of Language Watermarking for AI Safety

Natural language watermarking in AI safety is used to trace the origin of generated content, supporting accountability in automated text creation. In real-world applications, watermarking techniques embed subtle patterns in AI-generated language so that manipulation or unauthorized replication can be detected. These methods can improve trustworthiness in sectors like news media, legal documentation, and online content moderation by helping to limit misinformation and intellectual property misuse.

Challenges in Designing Robust Natural Language Watermarks

Designing robust natural language watermarks in AI safety faces considerable challenges such as maintaining imperceptibility while ensuring reliable detection amid diverse linguistic contexts. Another critical issue is avoiding degradation of the AI-generated text's coherence and fluency, which can impact user trust and system performance. Furthermore, resilience against adversarial attacks, including paraphrasing and text obfuscation, remains a major hurdle in developing effective watermarking techniques.

Ethical Considerations in AI Watermark Deployment

Natural language watermarks in AI systems embed subtle, context-aware markers within generated text to ensure model accountability and traceability while preserving linguistic integrity. Ethical considerations in AI watermark deployment emphasize transparency, user consent, and avoidance of bias to maintain trust and prevent misuse. Implementing these watermarks requires balancing detection precision with respect for privacy and freedom of expression.

Evaluating the Effectiveness of Watermarks in Preventing Misinformation

Natural language watermarks in AI safety embed subtle linguistic patterns in generated text to trace and verify content authenticity, which can help deter misinformation. Evaluations typically check that a watermark stays detectable without compromising the readability or utility of AI outputs. Metrics therefore weigh robustness against imperceptibility: how reliably watermarked text is identified at a fixed false positive rate on one side, and how natural the text remains on the other.
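
The detectability side of such an evaluation can be sketched as a true positive rate measured at a capped false positive rate. The function name `tpr_at_fpr` is hypothetical, and the scores are assumed to come from any detector in which a higher value means "more likely watermarked".

```python
def tpr_at_fpr(
    watermarked_scores: list[float],
    clean_scores: list[float],
    max_fpr: float = 0.01,
) -> tuple[float, float]:
    """Return (threshold, true positive rate) for the most permissive threshold
    whose false positive rate on clean text does not exceed `max_fpr`.

    A text is flagged when its score is strictly greater than the threshold.
    """
    if not watermarked_scores or not clean_scores:
        raise ValueError("both score lists must be non-empty")
    # Try thresholds from low to high; the false positive rate only falls as we go.
    for threshold in sorted(set(watermarked_scores) | set(clean_scores)):
        fpr = sum(s > threshold for s in clean_scores) / len(clean_scores)
        if fpr <= max_fpr:
            tpr = sum(s > threshold for s in watermarked_scores) / len(watermarked_scores)
            return threshold, tpr
    # Not reached in practice: at the largest score nothing is flagged, so fpr is 0.
    return float("inf"), 0.0
```

Reporting the rate at a fixed false positive budget, rather than raw accuracy, keeps the cost of wrongly flagging human-written text visible. The imperceptibility side needs a separate measure of text quality, which this sketch does not cover.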

The Future of Natural Language Watermarking in Safe AI Systems

Natural language watermarking in AI safety enables traceable, tamper-resistant identification of AI-generated content, enhancing accountability in automated systems. Advances in embedding robust, imperceptible linguistic patterns allow detection of manipulated outputs while preserving semantic integrity. This evolving technology could provide important safeguards for mitigating misinformation and ensuring transparency in future AI deployments.

example of natural language watermark in AI safety Infographic

samplerz.com
